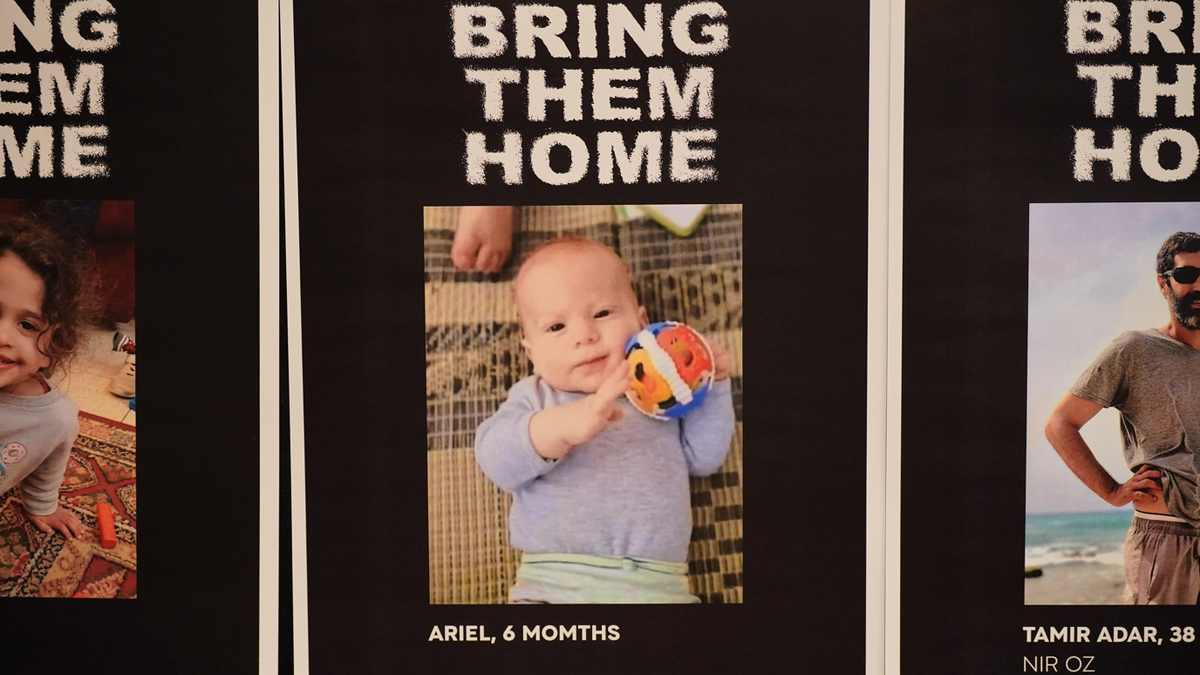

Artificial intelligence is increasingly being weaponized by criminals to execute sophisticated scams. One particularly disturbing trend involves using AI to clone voices and create fake hostage situations, preying on parents' fears. These scams often leverage information readily available on social media platforms like TikTok, Facebook, and Snapchat to make the impersonations incredibly convincing.

One Georgia mother, Debbie Shelton Moore, shared her harrowing experience with 11Alive, an Atlanta NBC affiliate. She received a call that seemed to come from her 22-year-old daughter, pleading for help. A man then took the phone and demanded a $50,000 ransom. What sounded like her daughter's cries could be heard in the background, adding to the chilling realism of the scam.

Moore recounted the sheer panic she felt, convinced it was her daughter's voice. The scammers even used a local area code to further their deception. Fortunately, her husband, who works in cybersecurity, was able to quickly expose the scheme by contacting their daughter directly.

This incident isn't isolated. A May McAfee survey of 7,000 people found that one in four respondents had either experienced an AI voice cloning scam or knew someone who had. Similar incidents have been reported in Arizona, where families received ransom demands backed by AI-generated voices of loved ones.

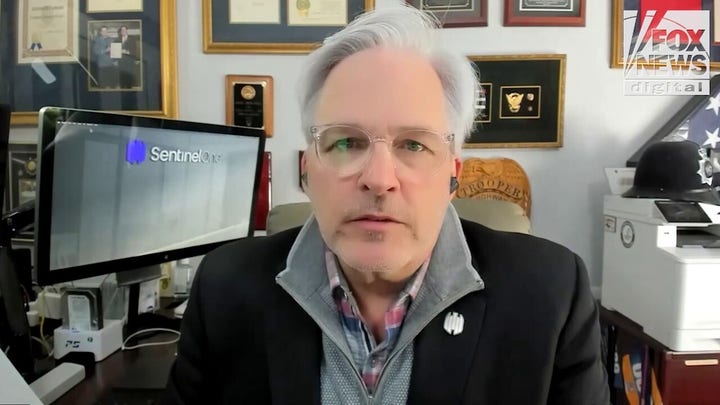

These scams highlight how easily AI tools can replicate a human voice from short audio clips available online. While AI holds immense potential, its misuse in crimes like these poses a serious threat. Law enforcement agencies, like the Cobb County Sheriff's Office, are now advising families to establish safe words or phrases to verify a caller's identity in potentially dangerous situations. This precaution can help people think clearly under duress and avoid falling victim to these technologically advanced scams.